Testing Quickstart

Overview

The automated testing system uses the Bitten extension to Trac which provides a slave and master system :

- slave : any machine capable of running NuWa, with nosebit installed

- master : dybsvn Trac instance http://dayabay.ihep.ac.cn/tracs/dybsvn

nosebit

nosebit denotes a collection of packages :

- nose : test discovery/running, orchestration with coverage and profilers

- setuptools : needed to install nose plugins, also provides easy_install tool

- xmlnose : nose plugin to provide test results in the xml format needed by the bitten master

- bitten-slave : from bitten, automated build/test runner, pure python

Which provide command line tools:

- nosetests : test running entry point

- easy_install : install python packages into NuWa python

- bitten-slave : communicates with bitten-master for instructions on tests (or builds) to be performed

Installation of nosebit into Nuwa python

Since r4360, nosebit is included with the dybinst externals, so the installation below should have been done automatically by dybinst (if you find otherwise, check ticket #19).

If which nosetests draws a blank when you are in your CMT environment, then you (or your administrator) will need to rerun dybinst with commands like :

./dybinst trunk checkout

./dybinst trunk external nosebit

to get up to date and install the nosebit external.

Check the nosebit installation

Check your installation by getting into the CMT controlled environment of any package that uses python, and trying these tools:

dyb__ ## see below for details

which nosetests ## also check easy_install and bitten-slave

The path returned should be beneath the external/Python directory of your NuWa installation. Verify that the list of plugins includes xml-output (used by automated testing system) :

nosetests --plugins | grep xml-output

Plugin xml-output

Utility dyb__* functions

These utility functions are used by the automated build and test system, so to investigate failures it is best to use these functions first to reproduce the failures, then proceed with using nosetests directly as you zero in on the failing tests.

Define the dyb__* functions in your environment with something like the below called from your $HOME/.bash_profile

. $NUWA_HOME/../installation/trunk/dybtest/scripts/dyb__.sh

Use tab-completion in your bash shell to see the functions available :

dyb__<tab>

Check your bash: if nothing is returned by the function below, then your bash does not need the workaround of setting the environment variable NUWA_HOME.

dyb__old_bash

Customize the defaults by overriding the dyb__buildpath function to feature your favourite repository path, for example by putting the below into your $HOME/.bash_profile. See the below section on bash functions if they are new to you.

dyb__buildpath(){ echo ${BUILD_PATH:-dybgaudi/trunk/Simulation/GenTools} ; }

OR :

dyb__buildpath(){ echo ${BUILD_PATH:-dybgaudi/trunk/DybRelease} ; }

- use BUILD_PATH if defined, otherwise the default path provided

- CAUTION: you must provide a repository path (with the trunk ) not a working copy path
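The difference between a repository path and a working copy path can be sketched in Python. This is only an illustration and not part of dyb__.sh: the helper names build_path and working_copy_path are hypothetical, and the sketch assumes the two forms differ only by a single trunk path element, as in the RootIO example later on this page.

```python
import os

# Default taken from the dyb__buildpath example above.
DEFAULT_BUILD_PATH = "dybgaudi/trunk/Simulation/GenTools"


def build_path(environ=None):
    """Return BUILD_PATH if set and non-empty, otherwise the default.

    Mirrors the shell idiom ${BUILD_PATH:-default}.
    """
    environ = os.environ if environ is None else environ
    return environ.get("BUILD_PATH") or DEFAULT_BUILD_PATH


def working_copy_path(repo_path):
    """Drop the 'trunk' element (assumption: it appears at most once)."""
    parts = repo_path.split("/")
    if "trunk" in parts:
        parts.remove("trunk")
    return "/".join(parts)


if __name__ == "__main__":
    print(working_copy_path(build_path()))
```

Passing a working copy path such as dybgaudi/RootIO/RootIOTest where a repository path is expected loses the trunk element, which is why the caution above matters.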

dyb__usage

The dyb__usage function provides brief help on the most important functions :

dyb__usage

Top level functions provided :

dyb__ [siteroot-relative-path]

path defaults to dybgaudi/Simulation/GenTools

jump into CMT controlled environment

and wc directory of a siteroot relative path,

the relative path must either end with "cmt" or with

a directory that contains the "cmt" directory

eg

dyb__ lcgcmt/LCG_Interfaces/ROOT/cmt

dyb__ lcgcmt/LCG_Interfaces/ROOT

dyb__ dybgaudi/DybRelease

dyb__update

uses dybinst to svn update and rebuild

dyb__checkout : ./dybinst trunk checkout

dyb__rebuild : ./dybinst -c trunk projects

dyb__test [options-passed-to-nosetests]

uses dyb__context to setup the environment and directory

then invokes nosetests with the options passed

Nose test running

Get into the CMT managed environment of your default build path and run nosetests with the function :

dyb__test [arguments-are-passed-directly-to-nosetests]

The automated tests are run from the package directory, that is, the parent directory of the cmt directory. Currently a simple nosetests invocation is performed; changing this simplest approach may be necessary in future to avoid issues with test isolation.

If you are already in the appropriate environment you can run tests with nosetests directly.

nosetests basic usage

Search for tests using the default test finding approach in the current directory, with command :

nosetests

For your tests to be found,

- collect them in the tests folder of your project folder

- name the python modules test_*.py

- name the test functions test_*

See the examples of tests in

You can also run the tests from specific directories or modules with eg

nosetests tests/test_look.py
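A minimal sketch of a module that follows these naming conventions is shown below. The file name tests/test_arith.py and the add helper are hypothetical and stand in for real project code; only the test_ prefixes matter for discovery.

```python
# tests/test_arith.py  (hypothetical file name, matches the test_*.py pattern)


def add(a, b):
    """Toy function under test; stands in for real project code."""
    return a + b


def test_add_integers():
    # A plain assert is enough: a failing assert marks the test as failed.
    assert add(2, 3) == 5


def test_add_is_commutative():
    assert add(1, 4) == add(4, 1)


if __name__ == "__main__":
    # Allow running the module directly, without nosetests.
    test_add_integers()
    test_add_is_commutative()
```

Such a module would be picked up by a bare nosetests from the package directory, or run on its own with nosetests tests/test_arith.py.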

nosetests options

Nosetests has a large number of options, see them listed with :

nosetests --help

Some of the most useful ones are :

-v / -vv / -vvv : verbosity control, default is very terse, just a "." for a successful test

-s / --nocapture : stdout/stderr for failing tests is usually captured and output at the end of the test run, use this to output immediately

nose documentation

nosetests is the command line tool that exposes the functionality of the nose python package. Access the pydoc for the nose package with :

pydoc nose

Or see it online at

Interactive Test Running

Note the trunk in the BUILD_PATH, as it is a repository path :

. $NUWA_HOME/../installation/trunk/dybtest/scripts/dyb__.sh ## defines the dyb__* functions

export BUILD_PATH=dybgaudi/trunk/RootIO/RootIOTest

dyb__test -v

- the -v is a verbosity option passed to nosetests

Example of running RootIO tests

[dayabaysoft@grid1 dayabaysoft]$ export BUILD_PATH=dybgaudi/trunk/RootIO/RootIOTest

[dayabaysoft@grid1 dayabaysoft]$ dyb__test -v

=== dyb__test : invoking context... [-v] from /home/dayabaysoft

------------------------------------------

Configuring environment for standalone package.

CMT version v1r20p20070720.

System is Linux-i686

------------------------------------------

Creating setup scripts.

Creating cleanup scripts.

BUILD_PATH=dybgaudi/trunk/RootIO/RootIOTest

NUWA_HOME=/disk/d3/dayabay/local/dyb/trunk_dbg/NuWa-trunk

=== dyb__test : invoking clean... [-v] from /disk/d3/dayabay/local/dyb/trunk_dbg/NuWa-trunk/dybgaudi/RootIO/RootIOTest

=== dyb__test : invoking nosetests... [-v] from /disk/d3/dayabay/local/dyb/trunk_dbg/NuWa-trunk/dybgaudi/RootIO/RootIOTest

test_io.test_genio ... FAIL

test_io.test_rootio ... ok

test_io.test_simio ... FAIL

test_io.test_dybio ... ok

======================================================================

FAIL: test_io.test_genio

----------------------------------------------------------------------

Traceback (most recent call last):

File "/disk/d3/dayabay/local/dyb/trunk_dbg/external/Python/2.5.2/slc3_i686_gcc323/lib/python2.5/site-packages/nose-0.10.3-py2.5.egg/nose/case.py", line 182, in runTest

self.test(*self.arg)

File "/disk/d3/dayabay/local/dyb/trunk_dbg/NuWa-trunk/dybgaudi/RootIO/RootIOTest/tests/test_io.py", line 18, in test_genio

Run( "python share/geniotest.py output" , parser=m , opts=opts )().assert_()

File "/disk/d3/dayabay/local/dyb/trunk_dbg/installation/trunk/dybtest/python/dybtest/run.py", line 48, in assert_

assert self.prc == 0 , self

AssertionError: <Run "<CommandLine "python share/geniotest.py output" rc:0 duration:19 [] >" opts:{'maxtime': 300, 'timeout': -1.0, 'verbose': True} prc:1 parser:<Matcher {'.*ERROR': 1,

'.*FATAL': 2,

'.*\\*\\*\\* Break \\*\\*\\* segmentation violation': 3,

'^\\#\\d': None} > >

-------------------- >> begin captured stdout << ---------------------

forking <Run "<CommandLine "python share/geniotest.py output" rc:None duration:None [] >" opts:{'maxtime': 300, 'timeout': -1.0, 'verbose': True} prc:0 parser:<Matcher {'.*ERROR': 1,

'.*FATAL': 2,

'.*\\*\\*\\* Break \\*\\*\\* segmentation violation': 3,

'^\\#\\d': None} > >

[1] XmlCnvSvc ERROR Expression evaluation error: UNKNOWN_VARIABLE

[1] XmlCnvSvc ERROR [ADoavHeight/2.+ReflectorOffset]

[1] XmlCnvSvc ERROR Expression evaluation error: UNKNOWN_VARIABLE

[1] XmlCnvSvc ERROR [-ADoavHeight/2.-ReflectorOffset]

[1] XmlCnvSvc ERROR ^

subprocess returned ... <CommandLine "python share/geniotest.py output" rc:0 duration:19 [] >

completed <Run "<CommandLine "python share/geniotest.py output" rc:0 duration:19 [] >" opts:{'maxtime': 300, 'timeout': -1.0, 'verbose': True} prc:1 parser:<Matcher {'.*ERROR': 1,

'.*FATAL': 2,

'.*\\*\\*\\* Break \\*\\*\\* segmentation violation': 3,

'^\\#\\d': None} > >

Automated Build/Test Running

The results of automated testing are reported by the Bitten Trac plugin at

- i:build summary of all configurations

- i:build/dybinst details for the dybinst configuration

Some documentation on test triggering criteria is given on the above pages.

Adding a script to automated testing

Create the test

- Create a tests directory as sibling to the cmt directory of the project

- Add an interface script at path tests/test_myscript.py

- NB the directory must be called tests and the filename must be of form test_*.py

Example of tests/test_myscript.py :

from dybtest import Matcher, Run

"""
Example of interfacing :

    "python share/myscript.py myarg1 myarg2"

into the automated testing system, without modification to the script.

The script is run in a subprocess and the stdout/stderr is piped out and
examined by the matcher using python regular expressions that are matched
against every line of output, providing line return codes when a match is found.

The maximum return code from all the lines of output is the matcher
return code, which if greater than zero causes the test to fail.

If the running time of the subprocess script exceeds the configured maxtime
(in seconds), the subprocess is killed.
"""

checks = {
    '.*FATAL':2,
    '.*ERROR':1,
    '.*\*\*\* Break \*\*\* segmentation violation':3,
    '^\#\d':None,
}

m = Matcher( checks, verbose=False )
opts = { 'maxtime':300 }

def test_myscript():
    Run( "python share/myscript.py myarg1 myarg2" , parser=m , opts=opts )().assert_()

if __name__=='__main__':
    test_myscript()
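The "maximum line return code" rule described in the docstring above can be sketched with the standard re module alone. This is a standalone illustration, not the dybtest Matcher implementation: the function names line_code and overall_code are hypothetical, and treating a None code as "contributes nothing" is an assumption.

```python
import re

# Same patterns as the checks dictionary above, written as raw strings.
checks = {
    r'.*FATAL': 2,
    r'.*ERROR': 1,
    r'.*\*\*\* Break \*\*\* segmentation violation': 3,
    r'^\#\d': None,  # assumption: None means "matched, but no return code"
}


def line_code(line, checks):
    """Return the largest code among the patterns matching this line, else 0."""
    codes = [code for pattern, code in checks.items()
             if code is not None and re.match(pattern, line)]
    return max(codes) if codes else 0


def overall_code(lines, checks):
    """Return the maximum line code over all lines of output (0 if none)."""
    return max([line_code(line, checks) for line in lines] or [0])


if __name__ == "__main__":
    output = [
        "Creating setup scripts.",
        "[1] XmlCnvSvc ERROR Expression evaluation error: UNKNOWN_VARIABLE",
    ]
    # A non-zero result corresponds to a failing test under the rule above.
    print(overall_code(output, checks))
```

Under this rule a single ERROR line is enough to fail a test even when the subprocess itself exits with return code zero, which is the situation shown in the RootIO example log (rc:0 but prc:1).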

This uses functionality provided in i:source:installation/trunk/dybtest/python/dybtest which is made globally available via nosebit_dybtest.

Introduce the test to the automated testing system

- Add a step element to the relevant recipes :

<step id="test-myproject" description="test-myproject" onerror="continue" >
    <sh:exec executable="bash" output="test-myproject.out"
        args=" -c &quot; &env; export BUILD_TEST=myproject ; export BUILD_PATH=dybgaudi/trunk/Simulation/MyProject ; &test; &quot; " />
    <python:unittest file="test-myproject.xml" />
</step>

Note that two variables must be exported :

- BUILD_TEST : name of test that must match that used in the python:unittest element

- BUILD_PATH : repository path (including the trunk) of your project

To follow what is happening examine the entity definitions at the top of the recipe.

After committing the updated recipe, all the slaves should begin to perform your additional tests.

Test failures

First check the status of the build, for example at i:build/dybinst, and look for

- consistency between slaves

- changes in the numbers of tests

Inconsistency between slaves indicates either :

- architecture dependence (unlikely for failures at test stage, not uncommon for compilation issues)

- difference between the working copies of the slave : perhaps due to a forgotten commit resulting in a conflict when someone else makes changes

Unexpected changes in the number of tests usually indicate failure to import a python module.

Switching off a single test

You can switch off a failing test by defining a __test__ attribute of the test function to be False, eg:

def test_example():
    assert 0

test_example.__test__ = False
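The effect of the __test__ attribute can be illustrated with a toy collector. This is a simplified sketch and not nose's actual discovery code: the collect function is hypothetical and only looks at the test_ name prefix and the __test__ attribute.

```python
def collect(namespace):
    """Return the sorted names of callables that look like enabled tests."""
    selected = []
    for name, obj in namespace.items():
        if not name.startswith("test_") or not callable(obj):
            continue
        # A function explicitly marked __test__ = False is skipped.
        if getattr(obj, "__test__", True) is False:
            continue
        selected.append(name)
    return sorted(selected)


def test_enabled():
    assert True


def test_example():
    assert 0


# Switch the failing test off, as described above.
test_example.__test__ = False


if __name__ == "__main__":
    print(collect({"test_enabled": test_enabled, "test_example": test_example}))
```

Remember to remove the attribute once the underlying problem is fixed, since a switched-off test no longer appears in the test counts.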

Test Admin

Bitten provides configuration pages from which build configurations or slaves can be administrated by users with BUILD_ADMIN or TRAC_ADMIN privilege.

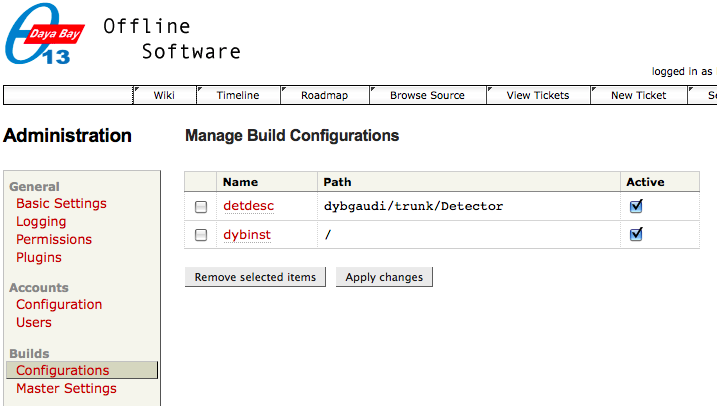

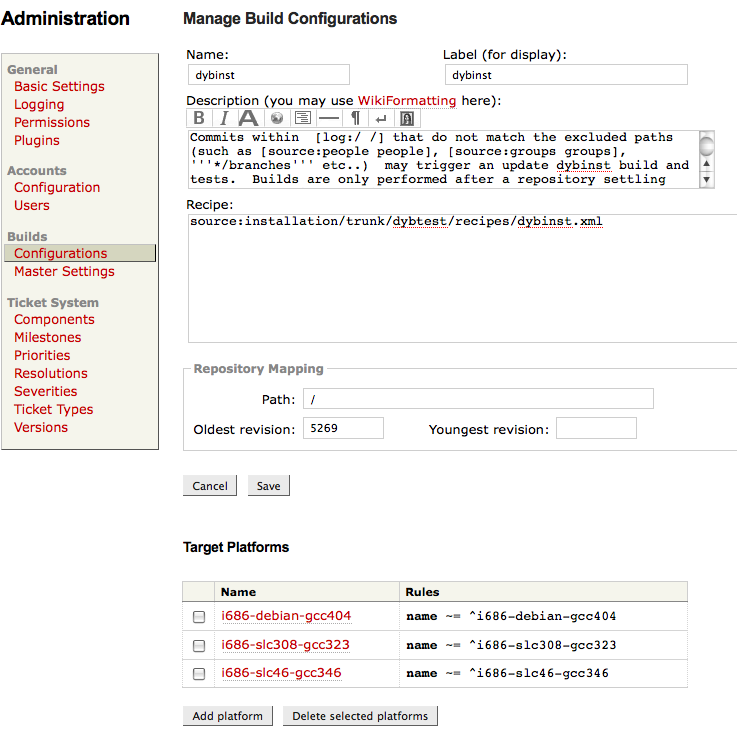

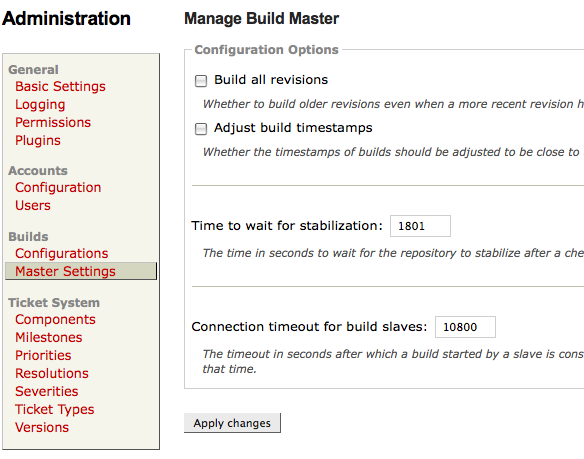

- i:admin/bitten/configs : enable/disable configs such as dybinst, detdesc; set the slaves assigned to each, etc.

- i:admin/bitten/master : master settings such as time to wait for stabilization, etc.

Manage Build Configurations

Include/Exclude Slaves

Slaves can be removed by selecting them and using the Delete selected platforms button. They can be added with the Add Platform button; the current convention is to define the Platform identification rule based on the name of the slave :

i686-debian-gcc404 BNL

i686-slc308-gcc323 NTU

i686-slc46-gcc346 NTU

Master Settings

A simple way to suspend all testing is to set the repository stabilization time to a high value. The current default stabilization time is 1800 seconds.

Bitten recipes

The Bitten master manages XML recipes for automated building and test running. Recipes are associated with repository paths, to form the builds. Following svn checkins within the paths, builds are assigned "pending" status.

An example of a recipe :

<!DOCTYPE build [
<!ENTITY slav " export BUILD_PATH=${path} ; export BUILD_CONFIG_PATH=${path} ; export BUILD_CONFIG=${config} ; export BUILD_REVISION=${revision} ; export BUILD_NUMBER=${build} ; " >
<!ENTITY nuwa " export NUWA_HOME=${nuwa.home} ; export NUWA_VERSION=${nuwa.version} ; export NUWA_SCRIPT=${nuwa.script} ; " >
<!ENTITY scpt "export BUILD_PWD=$PWD ; . $NUWA_HOME/$NUWA_SCRIPT ; " >
<!ENTITY env " &slav; &nuwa; &scpt; " >
<!ENTITY test "dyb__test --with-xml-output --xml-outfile=$BUILD_PWD/test-$BUILD_TEST.xml --xml-baseprefix=$(dyb__relativeto $BUILD_PATH $BUILD_CONFIG_PATH)/ ; " >
]>
<build xmlns:python="http://bitten.cmlenz.net/tools/python"
       xmlns:svn="http://bitten.cmlenz.net/tools/svn"
       xmlns:sh="http://bitten.cmlenz.net/tools/sh" >
<step id="checkout" description="checkout" onerror="continue" >
    <sh:exec executable="bash" output="checkout.out" args=" -c &quot; &env; dyb__checkout &quot; " />
</step>
<step id="external" description="external" onerror="continue" >
    <sh:exec executable="bash" output="external.out" args=" -c &quot; &env; dyb__external &quot; " />
</step>
<step id="relax" description="relax" onerror="continue" >
    <sh:exec executable="bash" output="relax.out" args=" -c &quot; &env; dyb__projects relax &quot; " />
</step>
<step id="gaudi" description="gaudi" onerror="continue" >
    <sh:exec executable="bash" output="gaudi.out" args=" -c &quot; &env; dyb__projects gaudi &quot; " />
</step>
<step id="lhcb" description="lhcb" onerror="continue" >
    <sh:exec executable="bash" output="lhcb.out" args=" -c &quot; &env; dyb__projects lhcb &quot; " />
</step>
<step id="dybgaudi" description="dybgaudi" onerror="continue" >
    <sh:exec executable="bash" output="dybgaudi.out" args=" -c &quot; &env; dyb__projects dybgaudi &quot; " />
</step>
<step id="test-gentools" description="test-gentools" onerror="continue" >
    <sh:exec executable="bash" output="test-gentools.out"
        args=" -c &quot; &env; export BUILD_TEST=gentools ; export BUILD_PATH=dybgaudi/trunk/Simulation/GenTools ; &test; &quot; " />
    <python:unittest file="test-gentools.xml" />
</step>
<step id="test-rootio" description="test-rootio" onerror="continue" >
    <sh:exec executable="bash" output="test-rootio.out"
        args=" -c &quot; &env; export BUILD_TEST=rootio ; export BUILD_PATH=dybgaudi/trunk/RootIO/RootIOTest ; &test; &quot; " />
    <python:unittest file="test-rootio.xml" />
</step>
</build>

A running Bitten slave polls the master (using HTTP) to see if there are any pending builds that it can perform. If there are, it GETs the corresponding recipe and configuration parameters such as the BUILD_PATH from the master, then follows the steps of the recipe, reporting progress to the master after each step.
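The poll, run and report cycle can be sketched as a small loop. This is a conceptual illustration only, not bitten-slave's code: run_slave and its fetch_pending, run_step and report callables are hypothetical stand-ins for the HTTP exchange with the master, and a build is assumed to be a dictionary with a list of step names.

```python
import time


def run_slave(fetch_pending, run_step, report,
              interval=300, single=False, sleep=time.sleep):
    """Poll for pending builds; run each step and report after each one.

    fetch_pending() returns a build dict or None when nothing is pending.
    run_step(build, step) returns the outcome of one recipe step.
    report(build, step, outcome) sends that outcome back to the master.
    """
    while True:
        build = fetch_pending()
        if build is None:
            sleep(interval)  # nothing to do, wait for the next poll
            continue
        for step in build["steps"]:
            outcome = run_step(build, step)
            report(build, step, outcome)  # progress is reported per step
        if single:
            return  # exit after completing a single build


if __name__ == "__main__":
    pending = [None, {"id": 1, "steps": ["checkout", "test-rootio"]}]
    run_slave(
        fetch_pending=lambda: pending.pop(0),
        run_step=lambda build, step: "ok",
        report=lambda build, step, outcome: print(build["id"], step, outcome),
        interval=0,
        single=True,
    )
```

Injecting the three callables keeps the loop testable without a running master.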

Setup/configure a bitten slave

Define some functions for configuration/control of the slave

. $NUWA_HOME/../installation/trunk/dybtest/scripts/slave.bash

slave-usage

The slave is configured using two files. The first, $HOME/.slaverc, holds the credentials/url with which to connect to the master :

#

# generated on : Thu Sep 11 17:39:04 CST 2008

# by function : slave-demorc

# from script : /data/env/local/dyb/trunk_dbg/installation/trunk/dybtest/scripts/slave.bash

#

#

# name used for logfile and tmp directory identification and identification of the

# slave on the server ... convention is to use the NEWCMTCONFIG string

#

local name=i686-slc46-gcc346

#

# "builds" url of the master trac instance and credentials with which to connect

#

#local url=http://dayabay.phys.ntu.edu.tw/tracs/dybsvn/builds

local url=http://dayabay.ihep.ac.cn/tracs/dybsvn/builds

local user=slave

local pass=youknowit

#

# absolute path to directory containing dybinst exported script

#

local home=/data/env/local/dyb/trunk_dbg

#

# absolute path to slave config file

#

local cfg=$home/installation/trunk/dybtest/recipes/cms01.cfg

And a second defining the characteristics of the NuWa installation :

#
# configuration for a bitten slave
#
# the master deals in repository paths hence have to strip
# the "trunk" to get to working copy paths
#
[nuwa]
home = /data/env/local/dyb/trunk_dbg/NuWa-trunk
version = trunk
script = ../installation/trunk/dybtest/scripts/dyb__.sh

The quantities defined are accessible within the recipe context as eg:

${nuwa.home}
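How a [nuwa] section can turn into ${nuwa.home} style references is sketched below using the Python 3 standard library. This illustrates the naming scheme only and is not how bitten-slave itself performs the substitution; the load_properties and expand helpers are hypothetical.

```python
import configparser
import re

# Content of the slave config file shown above.
CFG_TEXT = """
[nuwa]
home = /data/env/local/dyb/trunk_dbg/NuWa-trunk
version = trunk
script = ../installation/trunk/dybtest/scripts/dyb__.sh
"""


def load_properties(text):
    """Flatten an ini-style config into {'section.option': value}."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return {
        "%s.%s" % (section, option): value
        for section in parser.sections()
        for option, value in parser.items(section)
    }


def expand(template, props):
    """Replace ${name} with props[name]; unknown names are left untouched."""
    return re.sub(r"\$\{([^}]+)\}",
                  lambda m: props.get(m.group(1), m.group(0)),
                  template)


if __name__ == "__main__":
    props = load_properties(CFG_TEXT)
    print(expand("export NUWA_HOME=${nuwa.home} ;", props))
```

References such as ${path} or ${revision} are not defined by this file, so the sketch leaves them unexpanded; in the recipe those come from the master.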

slave running

The slave polls the master looking for pending builds that it can perform, based on match criteria configured in the master. When a pending build is found that matches the slave, the steps of the build are done and reported back to the master. Test outcomes in XML are reported to the master, which parses them and places them into the Trac database ready for presentation.

To start the slave:

slave-start [options-passed-to-slave]

This function invokes the bitten-slave command, which does the setup of working directories and configuration file access. Options to the function are passed to the command.

Useful options for initial debugging :

-s, --single : exit after completing a single build

-n, --dry-run : do not report results back to master

-i SECONDS, --interval=SECONDS : time to wait between requesting builds, poll interval (default 300 s)

For long term slave running, a detached screen can be used, started with :

slave-screen-start

Bash Functions Primer

Define the function :

demo-func(){

echo $FUNCNAME hello [$BASH_SOURCE]

}

Invoke it :

demo-func

demo-func hello []

Show the definition :

type demo-func

demo-func is a function

demo-func ()

{

echo $FUNCNAME hello [$BASH_SOURCE]

}
